Neuroscientists Create New Algorithm for Better Decoding Brain Signals, with Applications Across Industries

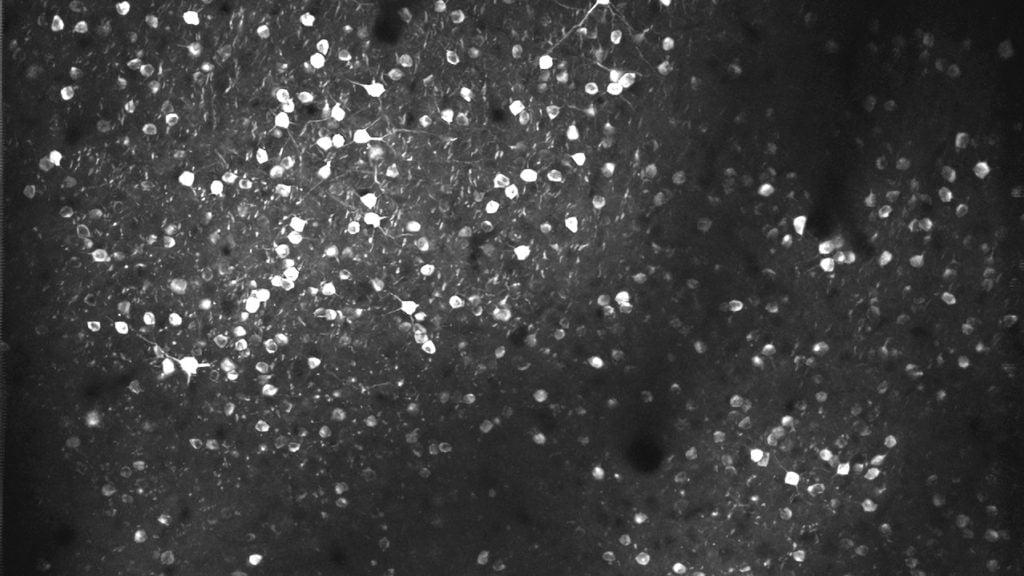

Matthew Kaufman studies neural activity and develops new mathematical methods to understand how brain areas generate the signals needed to command behavior. Seen here are several hundred neurons in the brain of an awake mouse.

Matthew Kaufman, assistant professor at the University of Chicago in the Department of Organismal Biology and Anatomy and the Neuroscience Institute, has developed a new algorithm with myriad potential applications in robotics, aerospace, healthcare, and more.

Broadly, Kaufman’s research, both experimental and theoretical, examines how neurons in the brain implement decision making and motor control.

“Every time you act on the world, it is through movement,” said Kaufman. “This is really fundamental to being the kind of animal we are, and it feels easy, but it is a remarkably complicated thing and involves many parts of the brain.”

To better understand what happens in the brain, researchers can “eavesdrop” on what some of its neurons are doing, but the output is in “their language,” and understanding what it means requires a lot of math and theory.

A microscope used to image neural activity.

Neurons communicate through pulses called spikes, but a neuron’s spiking is not consistent from one repetition of a behavior to the next. That variability makes it difficult to see through the “noise” and accurately measure and analyze what is happening in the larger brain circuit.

One approach to this inference problem is the widely used Kalman filter, an algorithm that estimates and predicts the underlying state of a system from noisy or indirect measurements. It leverages the fact that systems tend to behave smoothly rather than erratically, and it is used across industries in applications such as navigation, tracking, and autonomous vehicle control. For neuroscientists, it is useful for brain-machine interfaces, devices through which a person with paralysis can control a computer or robotic limb.
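For readers who want to see the idea concretely, here is a minimal sketch of a one-dimensional Kalman filter in Python. It is a textbook scalar version, not code from Kaufman’s lab, and the function names (`kalman_1d`, `demo`) and the parameter values (`a`, `q`, `r`) are illustrative assumptions. Each step predicts where the hidden state should be, then corrects that prediction by a fraction of the measurement error.

```python
import random


def kalman_1d(measurements, a=1.0, q=1e-3, r=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter (illustrative assumption, not from the article).

    Assumed model: x[k] = a * x[k-1] + process noise (variance q)
                   z[k] = x[k] + measurement noise (variance r)
    """
    x, p = x0, p0  # current state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: propagate the estimate and its uncertainty one step.
        x = a * x
        p = a * p * a + q
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


def demo(seed=0, n=300, noise_std=0.5):
    """Filter a slowly drifting signal; return (raw_mse, filtered_mse)."""
    rng = random.Random(seed)
    truth = [1.0 + 0.002 * k for k in range(n)]  # smooth, slow drift
    noisy = [x + rng.gauss(0.0, noise_std) for x in truth]
    filtered = kalman_1d(noisy, r=noise_std ** 2)
    raw_mse = sum((z - x) ** 2 for z, x in zip(noisy, truth)) / n
    filt_mse = sum((e - x) ** 2 for e, x in zip(filtered, truth)) / n
    return raw_mse, filt_mse


if __name__ == "__main__":
    raw, filt = demo()
    print("raw measurement error:", raw)
    print("filtered error:", filt)
```

Because the true signal in this toy example changes slowly, the filter can average away much of the measurement noise. The smoothness assumption is built into the small process-noise value `q`.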

“There have been many ways that people have tried to improve the properties of the Kalman filter, and it has improved, but there are still fundamental limitations,” explained Kaufman, who has developed a new algorithm that overcomes many of these challenges.

Invented with a former undergraduate, David Sabatini, and patented by the Polsky Center for Entrepreneurship and Innovation, the new algorithm – Decoding using Manifold Neural Dynamics, or DeMaND – uses a two-step procedure to decode signals. First, it learns a map of the “rules” of how the recorded signals, such as neurons’ activity, evolve. Then, it uses this map and its understanding of the system to see through the noise and decode the signals of interest. This approach allows for a more flexible model while requiring less training data.
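The article does not give DeMaND’s internals, so the following Python sketch is only a loose toy analogy of the two-step idea and not the patented method. Every name in it (`simulate`, `fit_linear_map`, `decode`, `mse`) is hypothetical. It also assumes the “rules” are a single linear coefficient, whereas DeMaND is described as suited to nonlinear systems. Step one learns how the signal evolves from a training recording; step two uses that learned rule to predict each next value and nudges the prediction toward the noisy measurement.

```python
import random


def simulate(a, n, process_std=0.1):
    """Toy hidden signal: x[k+1] = a * x[k] + a small random drive."""
    x, xs = 0.0, []
    for _ in range(n):
        x = a * x + random.gauss(0.0, process_std)
        xs.append(x)
    return xs


def fit_linear_map(xs):
    """Step 1 (assumed form): least-squares fit of a in x[k+1] ~ a * x[k]."""
    num = sum(x0 * x1 for x0, x1 in zip(xs, xs[1:]))
    den = sum(x0 * x0 for x0 in xs[:-1])
    return num / den


def decode(measurements, step, gain=0.15):
    """Step 2: predict with the learned map, then correct toward the data."""
    x_hat, out = 0.0, []
    for z in measurements:
        pred = step(x_hat)                # where the rules say we should be
        x_hat = pred + gain * (z - pred)  # partial correction from the data
        out.append(x_hat)
    return out


def mse(estimates, truth):
    """Mean squared error between two equal-length sequences."""
    return sum((e - t) ** 2 for e, t in zip(estimates, truth)) / len(truth)


if __name__ == "__main__":
    random.seed(1)
    a_hat = fit_linear_map(simulate(0.95, 2000))        # learn the rule
    truth = simulate(0.95, 500)                         # new hidden signal
    noisy = [x + random.gauss(0.0, 0.5) for x in truth]
    decoded = decode(noisy, lambda x: a_hat * x)
    print("learned coefficient:", a_hat)
    print("raw error:", mse(noisy, truth))
    print("decoded error:", mse(decoded, truth))
```

Passing the learned rule to `decode` as a function (`step`) is a deliberate choice. In principle a nonlinear map could be swapped in without changing the decoding loop, though how DeMaND actually represents and uses its map is not described here.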

Though originally developed for decoding signals from brains, DeMaND could be used in many applications that currently rely on the Kalman filter. It is particularly suited to systems with complex, nonlinear rules.

“There are lots of applications in aerospace, robotics, maybe in financial forecasting, or even cardiology, where you are trying to infer what the heart is doing from indirect measurements,” said Kaufman. “There are a whole host of places where the measurement you get is indirectly related to what you really want to know, and the underlying system is complicated.” And this is where DeMaND has the upper hand.

“If you can do better inference from your sensors, you can engineer a physical system differently because you’re not stuck controlling it based on simple models,” explained Kaufman. For example, in the aerospace industry, fly-by-wire systems, which use Kalman filters to compensate for errors during flight, made it possible to build faster and lighter aircraft. “You can make different tradeoffs in how you physically build something when your control algorithms are more sophisticated,” he added.

Importantly, the algorithm also requires far less computing power to run than many alternatives, such as neural networks, making it more cost-efficient and better for the environment. Neural networks are also often thought of as “black boxes.”

“There’s been progress, but we still don’t generally understand how a given neural network works,” he said. With DeMaND, it’s not a black box. “You don’t have to worry so much about an unforeseen circumstance making it go haywire. You can examine how it will handle edge cases and decide what you want it to do. With neural networks, you never really know. You can run it through extreme examples but you still may encounter cases that aren’t what it was trained for and get a disaster. Our system doesn’t have that problem.”

Though Kaufman said he never sets out to invent something with the goal of patenting it, he knew the tool would be valuable to other people. “If you want to see something used, it makes sense to protect the IP and license it,” he said.

INTERESTED IN THIS TECHNOLOGY? Contact Harrison Paul, who can provide more detail, discuss the licensing process, and connect you with the inventor.

// Polsky Patented is a column highlighting research and inventions from University of Chicago faculty.